Before selecting and working with data, it helps to get to know it and understand its content. An initial investigation of a dataset should be conducted before the dataset is used in research or further analysis. It can help to detect trends and patterns in the data, identify outliers, and find useful relations among variables. This investigation is called Exploratory Data Analysis (EDA). EDA applies statistical methods and data visualization tools to support the exploration process. Its main goals are to understand the content and structure of the data and to find out whether the data has any problems.

Knowing Your Data

In this article, the data explored is mostly textual. I will present an overview of some EDA tools for Arabic text using Python, such as the number of characters per token, the number of tokens per post, and the word cloud graph. An in-depth investigation supports understanding the content of the dataset from multiple dimensions, and some visualization tools are used to better understand the content and context of the data. This article covers the following steps:

- Step 1: importing libraries

- Step 2: reading the dataset

- Step 3: basic filtering and cleaning

- Step 4: tokens frequencies

- Step 5: stop words frequencies

- Step 6: number of tokens per tweet

- Step 7: number of characters per token

- Step 8: word cloud graph

Dataset

Please cite these references if you use the code discussed in this article in publications or for academic purposes:

- Husain, F., & Uzuner, O. (2021). Exploratory Arabic Offensive Language Dataset Analysis. arXiv preprint arXiv:2101.11434.

- Husain, F. (2021). Arabic Offensive Language Detection in Social Media (Doctoral dissertation, George Mason University).

You might also check the above-mentioned references to see how I used the same code to study offensive language detection across several datasets. Please feel free to contact me if you would like a copy of the references.

Basic EDA Techniques Examples For Arabic text in Python

Step 1: Importing Libraries:

import pandas as pd

import numpy as np

import nltk

import csv

import string

import re

from nltk import word_tokenize

import itertools

import collections

import codecs

import requests

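import matplotlib.pyplot as plt

# Tokenizer models used by word_tokenize; recent NLTK releases may also need nltk.download('punkt_tab')

nltk.download('punkt')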

Step 2: Reading the Dataset:

First, I uploaded the dataset to the Colab project; then I read the file as follows:

Dataset = pd.read_csv('/content/Machboos.csv', sep=',', encoding='utf-8-sig')

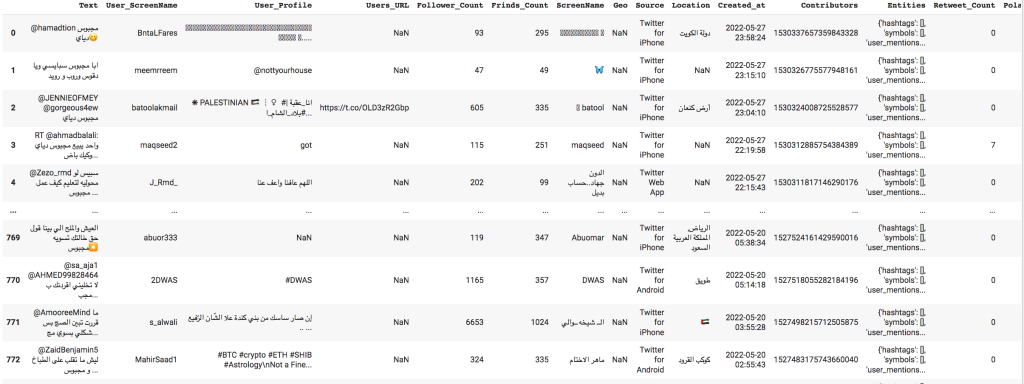

Dataset

Since the exploration focuses only on the tweets, I will extract the tweets and save them into a pandas Series to be used in the following steps.

tweets = Dataset.loc[:,"Text"]

tweets

The total number of tweets is 774 tweets before cleaning and filtering.

Step 3: Basic Filtering and Cleaning:

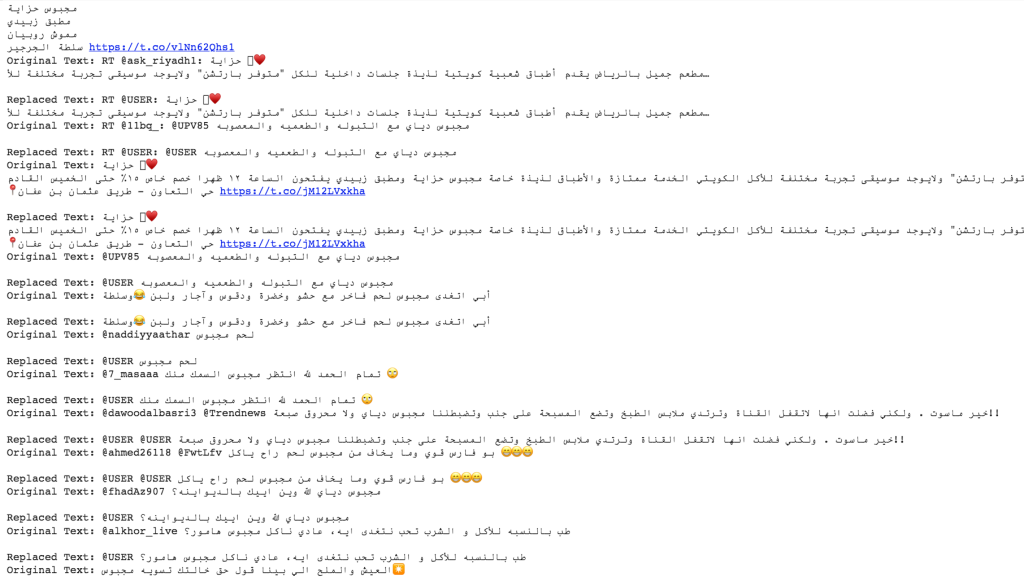

The main goal of this step is to remove any unnecessary content that might affect the results of the analysis or that might violate users’ privacy. For example, it is recommended to remove usernames from the data to preserve the users’ privacy.

In this script, I first replace username mentions with the keyword “@USER” to anonymize the data:

# Define a list for the filtered tweets

filteredTweets = []

for tweet in tweets:

    tweet = str(tweet)

    # Replace each username mention with the placeholder "@USER"

    replacedText = re.sub('@[a-zA-Z0-9_.-]*', '@USER', tweet)

    # Uncomment the next line if you want to remove user mentions entirely

    #replacedText = re.sub('@USER:', '', replacedText)

    # Print the original tweet

    print("Original Text:", tweet)

    # Print the replaced tweet

    print("\nReplaced Text:", replacedText)

    # Add the replaced tweet to the filtered tweets list

    filteredTweets.append(replacedText)
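As a compact illustration of the anonymization step, here is a minimal sketch on a made-up sample tweet. The helper name `anonymize_mentions` and the sample text are my own assumptions for illustration. The sketch uses `+` instead of `*` in the pattern, so that a lone "@" with no username after it is left untouched, whereas the `*` version would turn it into "@USER" as well.

```python
import re

# Hypothetical helper for illustration; "+" requires at least one
# username character after "@", so a bare "@" is not replaced.
MENTION_PATTERN = re.compile(r"@[A-Za-z0-9_.-]+")


def anonymize_mentions(text, placeholder="@USER"):
    """Replace every @username mention with a fixed placeholder."""
    return MENTION_PATTERN.sub(placeholder, str(text))


# Made-up sample tweet, not taken from the dataset
sample = "RT @some_user: مرحبا @another.user كيف الحال @"
print(anonymize_mentions(sample))
```

Note that the colon after the first mention is kept, which is why the token "@USER:" shows up later in the frequency counts.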

Duplicated tweets might affect the results, so I first remove the retweets keyword “RT” and then remove duplicates.

# removing RT

# Define a list for the tweets without the RT keyword

filteredCleanedTweet = []

for tweet in filteredTweets:

    tweet = str(tweet)

    # Remove "RT" only when it stands alone as a word, so that letters inside other words are kept

    replacedText = re.sub(r'\bRT\b', '', tweet)

    # Print the original tweet

    print("Original Text:", tweet)

    # Print the replaced tweet

    print("\nReplaced Text:", replacedText)

    # Add the replaced tweet to the cleaned tweets list

    filteredCleanedTweet.append(replacedText)

Now let's check whether we still need to filter some of the content.

filteredCleanedTweet

The newline character “\n” needs to be removed too, before removing duplicates.

# removing the newline character "\n"

filteredCleanedLineTweet = []

for tweet in filteredCleanedTweet:

    tweet = str(tweet)

    # Replace each newline character with a space

    replacedText = re.sub('\n', ' ', tweet)

    # Add the replaced tweet to the cleaned tweets list

    filteredCleanedLineTweet.append(replacedText)

filteredCleanedLineTweet

Once we are done with the basic filtering and cleaning, the list of cleaned tweets will be formatted back into a Pandas dataframe. A dataframe makes it easier to play around with the data and use multiple tools to explore its content.

# Convert the tweets format to a Pandas dataframe

df = pd.DataFrame({'tweet':filteredCleanedLineTweet})

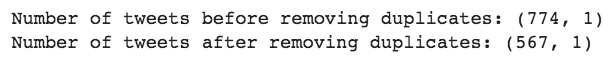

I will check the size of the dataframe first, then remove the duplicated tweets and check the size again to calculate the percentage of duplicated tweets in the dataset.

# Checking the size of the dataframe before removing duplicated tweets

print("Number of tweets before removing duplicates:", df.shape)

# Removing duplicates

df = df.drop_duplicates()

# Checking the size of the dataframe after removing duplicated tweets

print("Number of tweets after removing duplicates:", df.shape)

Thus, about 26.7% of the dataset consists of duplicated tweets. Only the 567 unique tweets will be used in the following analysis.
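The percentage follows directly from the two sizes reported in this article (774 tweets before and 567 after removing duplicates). A minimal sketch of the arithmetic, with a helper name of my own choosing:

```python
def duplicate_share(total_before, total_after):
    """Return the percentage of rows that were removed as duplicates."""
    removed = total_before - total_after
    return 100 * removed / total_before


# Counts reported in this article: 774 tweets before, 567 after
print(round(duplicate_share(774, 567), 1))  # 26.7
```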

Now I can start with some simple statistical analysis: frequencies of words, frequencies of stop words, statistical measures of text length based on the number of tokens, and statistical measures of token length based on the number of characters. To extract the most frequently used words accurately, I removed a list of stop words from the text. Stop words can still help in defining the context of the posts, so I also conduct a simple frequency analysis of the top stop words; a stop word that appears only in a particular context or dataset might be better treated as a regular word in the analysis. I also investigate the complexity of the text to check whether there is any pattern or relationship between text complexity and the context of the dataset. I used two measures to pursue this goal: the number of characters per token and the number of tokens per post. These analyses might be more informative when comparing multiple datasets or texts from different categories or labels.

Step 4: Tokens Frequencies:

First, the text needs to be tokenized to break down all tweets into a single list of tokens.

# text tokenizer function

def my_tokenizer(text):

    # Missing values are mapped to an empty list; everything else is split on whitespace

    return str(text).split() if text is not None else []

# transform list of documents into a single list of tokens

tokens = df.tweet.map(my_tokenizer).sum()

print(tokens)
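Summing lists works, but it builds a new list at every step, which can get slow on larger datasets. As an alternative, here is a minimal sketch that flattens the per-tweet token lists with `itertools.chain`. The helper name `flatten_tokens` and the sample texts are assumptions for illustration; the function accepts any iterable of strings, so a dataframe column such as `df.tweet` should work as input too.

```python
from itertools import chain


def flatten_tokens(texts):
    """Split each text on whitespace and return one flat list of tokens."""
    return list(chain.from_iterable(str(t).split() for t in texts))


# Made-up sample texts, not taken from the dataset
sample_texts = ["@USER: مرحبا بكم", "شكرا @USER"]
print(flatten_tokens(sample_texts))
```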

Now, let's check the 20 most common tokens.

from collections import Counter

counter = Counter(tokens)

counter.most_common(20)

A quick check of the results shows that most tweets contain username mentions, which means that most tweets are conversational tweets among users. Punctuation affects the results; the token “@USER” is treated as different from “@USER:”. It would be better to remove punctuation to get better results. Among the most frequently used words are stop words, such as و/and and ما/no. Stop words are the extremely common words in a language that are of little value to the analysis. It is recommended to filter stop words out of the text before developing any Natural Language Processing (NLP) system. After considering all these points, I will check the top 20 tokens again.
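To see why punctuation matters for the counts, here is a small illustration with made-up tokens. It only strips a trailing colon or Arabic comma, which is a simplification chosen for this sketch and not the full cleaning used in this article.

```python
from collections import Counter

# Made-up tokens for illustration, not taken from the dataset
tokens_demo = ["@USER:", "@USER", "@USER:", "مرحبا", "مرحبا،"]

# Without cleaning, "@USER" and "@USER:" are counted as different tokens
raw_counts = Counter(tokens_demo)

# Strip a trailing colon or Arabic comma so that the variants are merged
stripped_tokens = [t.rstrip(":،") for t in tokens_demo]
clean_counts = Counter(stripped_tokens)

print(raw_counts.most_common(2))
print(clean_counts.most_common(2))
```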

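To remove punctuation, a customized list of punctuation marks (Arabic marks plus common English ones) is defined. The code also loads the English punctuation string from Python's built-in string module and merges the two lists, although the filtering loop below checks tokens against the customized list only.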

# This function removes punctuation marks from a token

def removingPunctuation(text):

    arabicPunctuations = [".","`","؛","<",">","(",")","*","&","^","%","]","[",",","ـ","،","/",":","؟",".","'","{","}","~","|","!","”","…","“","–"] # customized punctuation marks

    englishPunctuations = list(string.punctuation) # English punctuation marks as a list of characters

    punctuationsList = arabicPunctuations + englishPunctuations # combined list of all punctuation marks

    cleanTweet = ''

    for i in text:

        # Only the customized list is checked here, so symbols such as "@" and "#" are kept;

        # test against punctuationsList instead to strip every English punctuation mark too

        if i not in arabicPunctuations:

            cleanTweet = cleanTweet + i

    return cleanTweet

tokenFiltered = []

for i in tokens:

    token = removingPunctuation(i)

    tokenFiltered.append(token)

Two lists of stop words were combined into a single list. The stop word lists available at Nuha Albadi’s GitHub repository and Mohamed Taher Alrefaie’s GitHub repository are used to further filter unnecessary words out of the tokens.

# Stop words can be downloaded from https://github.com/nuhaalbadi/Arabic_hatespeech for list 1 and

# for list 2 from https://github.com/mohataher/arabic-stop-words

path_1 = "/content/stop_words.csv" # List 1

path_2 = "/content/stop-words-list.txt" # List 2

# Read both lists once, rather than once per token, and merge them into a set for fast lookups

with codecs.open(path_1, "r", encoding="utf-8", errors="ignore") as myfile:

    stop_words_1 = [word.strip() for word in myfile.readlines()]

with codecs.open(path_2, "r", encoding="utf-8", errors="ignore") as myfile:

    stop_words_2 = [word.strip() for word in myfile.readlines()]

stop_words = set(stop_words_1 + stop_words_2)

# This function cleans the tokens from all stop words

def removingStopwords(intweet):

    cleanTweet = ''

    temptweet = word_tokenize(intweet)

    for i in temptweet:

        if i not in stop_words:

            cleanTweet = cleanTweet + ' ' + i

    return cleanTweet

tokenFilteredCleaned = []

for i in tokenFiltered:

    token = removingStopwords(i)

    tokenFilteredCleaned.append(token)
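The core of this step is a membership test against the stop word list. Here is a minimal sketch of that idea on its own, with a tiny made-up stop word list standing in for the two downloaded files; the helper name is also an assumption. Converting the list to a set keeps each lookup fast even when the list is long.

```python
def remove_stop_words(tokens, stop_words):
    """Return the tokens that are not in the stop word collection."""
    stop_set = set(stop_words)  # set membership tests are fast
    return [t for t in tokens if t not in stop_set]


# Tiny hypothetical stop word list and made-up tokens for illustration
demo_stop_words = ["و", "ما", "في"]
demo_tokens = ["و", "الكويت", "في", "مجبوس"]
print(remove_stop_words(demo_tokens, demo_stop_words))
```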

You might notice some extra spaces. So, I will remove the spaces, then check the token frequencies again and draw a frequency bar chart for the top 20 tokens.

# This function removes spaces from the tokens

def removingSpace(text):

    cleanTweet = ''

    temptweet = word_tokenize(text)

    for i in temptweet:

        # collapse any run of whitespace into a single space

        i = re.sub(r"\s+"," ", i)

        cleanTweet = cleanTweet + '' + i

    return cleanTweet

tokenFilteredCleanedExtra = []

for i in tokenFilteredCleaned:

    token = removingSpace(i)

    tokenFilteredCleanedExtra.append(token)

#checking the top tokens again after filtering and cleaning

counter = Counter(tokenFilteredCleanedExtra)

counter.most_common(20)

# Libraries for the interactive bar chart

import plotly.offline as po

from plotly.offline import iplot

import plotly.graph_objs as go

freq_df = pd.DataFrame.from_records(counter.most_common(20),

                                    columns=['token', 'count'])

# This function creates a bar chart of the 20 most frequent tokens

def topTokensBarchart(df):

    data = [go.Bar(x = df['token'], y = df['count'], name = 'Most Common Tokens in the Dataset')]

    layout = go.Layout(title = 'Most Common Tokens in the Dataset', xaxis_title="Tokens",

                       yaxis_title="Counts")

    fig = go.Figure(data = data, layout = layout)

    # po.plot writes the graph to an HTML file (temp-plot.html by default)

    po.plot(fig)

    fig.show()

topTokensBarchart(freq_df)

Results also show some unresolved tokens, which need customized preprocessing and cleaning. I will write a specific post to address these issues related to preprocessing Arabic text from user-generated content. For the purpose of this data exploration exercise, I will continue the analysis using this token list.

Step 5: Stop Words Frequencies:

The following bar chart plots the most commonly used stop words. In this step, I used the same two stop word lists as in the previous step to keep the analysis steps consistent.

# This function creates a bar chart for the most frequently used stop words

def stopwordsBarchart(text):

    # Stop words can be downloaded from https://github.com/nuhaalbadi/Arabic_hatespeech for list 1 and

    # for list 2 from https://github.com/mohataher/arabic-stop-words

    path_1 = "/content/stop_words.csv" # List 1

    path_2 = "/content/stop-words-list.txt" # List 2

    with codecs.open(path_1, "r", encoding="utf-8", errors="ignore") as myfile:

        stop_words = myfile.readlines()

    stop_words_1 = [word.strip() for word in stop_words]

    with codecs.open(path_2, "r", encoding="utf-8", errors="ignore") as myfile:

        stop_words = myfile.readlines()

    stop_words_2 = [word.strip() for word in stop_words]

    stop_words = stop_words_1 + stop_words_2

    # Flatten the tweets into one list of tokens

    new = text.str.split()

    new = new.values.tolist()

    corpus = [word for i in new for word in i]

    # Count how often each stop word occurs

    from collections import defaultdict

    dic = defaultdict(int)

    for word in corpus:

        if word in stop_words:

            dic[word] += 1

    # Keep the 10 most frequent stop words

    top = sorted(dic.items(), key=lambda x: x[1], reverse=True)[:10]

    x, y = zip(*top)

    data = [go.Bar(x = x, y = y, name = 'Most Common Stop Words in the Dataset')]

    layout = go.Layout(title = 'Most Common Stop Words in the Dataset', xaxis_title="Stop Words",

                       yaxis_title="Counts")

    fig = go.Figure(data = data, layout = layout)

    po.plot(fig)

    fig.show()

stopwordsBarchart(df['tweet'])

Step 6: Number of Tokens per Tweet:

I will calculate the number of tokens per tweet and the number of characters per token, as these can give some indication of the complexity of the topic and the tweets. The following histogram plots the number of tokens per tweet.

# This function draws a histogram of tweet lengths based on the number of tokens per tweet

def tokenPerTweetHistogram(text):

    text.str.split().\
        map(lambda x: len(x)).\
        hist()

tokenPerTweetHistogram(df['tweet'])

As can be seen from the histogram above, most tweets contain fewer than 10 tokens. The next histogram shows the number of characters per token.

Step 7: Number of Characters per Token:

# This function creates a histogram of the mean number of characters per token in each tweet

def characterPerTokenHistogram(text):

    text.str.split().\
        apply(lambda x : [len(i) for i in x]).\
        map(lambda x: np.mean(x)).\
        hist()

characterPerTokenHistogram(df['tweet'])
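Histograms show the shape of the distributions; a few summary numbers can complement them, especially when comparing datasets. Here is a minimal sketch using only the standard library. The helper name and the sample texts are assumptions for illustration, and it assumes a non-empty input with at least one token. Unlike the histogram above, which averages per tweet, this sketch averages the token length over all tokens at once.

```python
from statistics import mean, median


def length_summary(texts):
    """Summarize text length in tokens and token length in characters."""
    token_lists = [str(t).split() for t in texts]
    tokens_per_text = [len(tokens) for tokens in token_lists]
    chars_per_token = [len(tok) for tokens in token_lists for tok in tokens]
    return {
        "mean_tokens_per_text": mean(tokens_per_text),
        "median_tokens_per_text": median(tokens_per_text),
        "mean_chars_per_token": mean(chars_per_token),
    }


# Made-up placeholder texts: 3 tokens and 1 token, with lengths 1, 2, 3, 4
summary = length_summary(["a bb ccc", "dddd"])
print(summary)
```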

Step 8: Word Cloud Graph:

A word cloud graph provides a visual representation of the text, which can be very helpful for highlighting keywords and the most important terms in the textual data.

# Install and import the libraries needed to generate the word cloud

!pip install wordcloud-fa==0.1.4

import matplotlib.pyplot as plt

from wordcloud import WordCloud, STOPWORDS

from wordcloud_fa import WordCloudFa

word_cloud = WordCloudFa(persian_normalize=False)

# handcrafted terms/characters to further filter the text, selected based on a manual inspection of the data

stop = ['@USER:','@USER','.', ':', '?', '؟','...', '..', '"','!!','']

# words that have "ال" as a main part of them

no_prefix = ['الله', 'اللهم']

tokens_clean = []

for word in tokenFilteredCleanedExtra:

    if (word not in stop):

        if word.startswith('ال'):

            if (word not in no_prefix):

                word = word[2:] # to remove the prefix "ال"

        tokens_clean.append(word)

# printing the list of tokens

print(tokens_clean)

# generating the word cloud graph

# Join the tokens into one space-separated string before passing them to generate()

data = ' '.join(tokens_clean)

wc = word_cloud.generate(data)

image = wc.to_image()

# Save the image so that it can be downloaded from the Colab session files

image.save('wordcloud.png')

image.show()

In addition to the techniques discussed in this article, there are many other ways to analyze textual data, for example investigating the use of hashtags in tweets, the use of emojis, or, where possible, the frequencies of different part-of-speech tags (nouns, verbs, etc.).
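As one example of such an extension, here is a minimal sketch of a hashtag frequency count. It is not part of the analysis in this article; the helper name, the pattern, and the sample texts are assumptions for illustration. In Python 3, `\w` matches Arabic letters, digits, and the underscore, so Arabic hashtags are captured as well.

```python
import re
from collections import Counter

# "\w" is Unicode-aware for str patterns, so Arabic hashtags match too
HASHTAG_PATTERN = re.compile(r"#\w+")


def hashtag_counts(texts):
    """Count how often each hashtag appears across the texts."""
    counts = Counter()
    for text in texts:
        counts.update(HASHTAG_PATTERN.findall(str(text)))
    return counts


# Made-up sample texts, not taken from the dataset
demo_texts = ["#الكويت مجبوس لذيذ #طبخ", "وصفة جديدة #طبخ"]
print(hashtag_counts(demo_texts).most_common(5))
```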

In this article, I have presented eight basic EDA steps that support Arabic text. I hope you find them valuable and informative. Please let me know if you have any comments. You can find the full code on this Google Colab project or on my GitHub.